- review \(p\)-values & confidence intervals

- digest \(p\)-problems identified by Wagenmakers (2007)

- brief introduction to Rmarkdown & reproducible research


the \(p\)-value is the probability of observing, under infinite hypothetical repetitions of the same experiment, a value of a test statistic at least as extreme as that of the observed data, given that the null hypothesis is true

in the general case, the \(p\)-value of observation \(x\) under null hypothesis \(H_0\), with sample space \(X\), sampling distribution \(P(\cdot \mid H_0) \in \Delta(X)\) and test statistic \(t \colon X \rightarrow \mathbb{R}\) is:

\[ p(x ; H_0, X, P(\cdot \mid H_0), t) = \int_{\left\{ \tilde{x} \in X \ \mid \ t(\tilde{x}) \ge t(x) \right\}} P(\tilde{x} \mid H_0) \ \text{d}\tilde{x}\]

intuitive slogan: probability of at least as extreme outcomes
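For a discrete sample space the integral becomes a sum. A minimal sketch for 24 coin flips, assuming (as an illustrative choice that the definition itself does not fix) the test statistic \(t(k) = |k - 12|\), i.e., the distance of the number of heads from its expected value under \(H_0\); all variable names are ad hoc:

```r
# sample space: number of heads in n = 24 flips
n = 24
X = 0:n
# sampling distribution under H_0: theta = 0.5
prob.H0 = dbinom(X, size = n, prob = 0.5)
# assumed test statistic: distance from the expected number of heads
t.stat = function(k) abs(k - n * 0.5)
# p-value of the observation k = 7: total probability of all outcomes
# whose test statistic is at least as large as the observed one
k.obs = 7
p.value = sum(prob.H0[t.stat(X) >= t.stat(k.obs)])
p.value
```

With this statistic the outcomes counted as "at least as extreme" are \(k \le 7\) and \(k \ge 17\).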

for an exact test we get:

\[ p(x ; H_0, X, P(\cdot \mid H_0)) = \int_{\left\{ \tilde{x} \in X \ \mid \ P(\tilde{x} \mid H_0) \le P(x \mid H_0) \right\}} P(\tilde{x} \mid H_0) \ \text{d}\tilde{x}\]

intuitive slogan: probability of at least as unlikely outcomes
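The exact-test definition can be sketched in the same discrete setting by summing the probabilities of all outcomes that are no more likely than the observed one. The relative tolerance `tol` is an assumption of this sketch; it only keeps numerically tied outcomes (such as \(k = 7\) and \(k = 17\)) on the same side of the comparison:

```r
n = 24
k.obs = 7
X = 0:n
# sampling distribution under H_0: theta = 0.5
prob.H0 = dbinom(X, size = n, prob = 0.5)
# small relative tolerance (assumed) against floating-point ties
tol = 1 + 1e-7
# total probability of all outcomes at most as likely as the observed one
p.exact = sum(prob.H0[prob.H0 <= dbinom(k.obs, n, 0.5) * tol])
p.exact
```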

notation: \(\Delta(X)\) – set of all probability measures over \(X\)

fair coin?

\[ B(k ; n = 24, \theta = 0.5) = \binom{n}{k} \theta^{k} \, (1-\theta)^{n-k} \]

    binom.test(7,24)

    ## 
    ##  Exact binomial test
    ## 
    ## data:  7 and 24
    ## number of successes = 7, number of trials = 24, p-value = 0.06391
    ## alternative hypothesis: true probability of success is not equal to 0.5
    ## 95 percent confidence interval:
    ##  0.1261521 0.5109478
    ## sample estimates:
    ## probability of success 
    ##              0.2916667

    binom.test(7,24)$p.value

    ## [1] 0.06391466

use a large number of random samples to approximate the solution to a difficult problem

    library(tidyverse)  # provides map_int(), tibble(), %>% and ggplot()

    # repeat 24 flips of a fair coin 20,000 times
    n.samples = 20000
    x.reps = map_int(1:n.samples, function(i) {
      sum(sample(x = 0:1, size = 24, replace = TRUE, prob = c(0.5, 0.5)))
    })
    ggplot(data.frame(k = x.reps), aes(x = k)) + geom_histogram(binwidth = 1)

    # binomial likelihood of each simulated outcome under H_0
    x.reps.prob = dbinom(x.reps, 24, 0.5)
    # proportion of simulated outcomes at most as likely as the observed k = 7
    sum(x.reps.prob <= dbinom(7, 24, 0.5)) / n.samples

    ## [1] 0.0618

    # running Monte Carlo estimate of the p-value after each additional sample
    p.value.sequence = cumsum(x.reps.prob <= dbinom(7, 24, 0.5)) / 1:n.samples
    tibble(iteration = 1:n.samples, p.value = p.value.sequence) %>%
      ggplot(aes(x = iteration, y = p.value)) + geom_line()
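The same Monte Carlo approximation can be sketched in base R, together with a rough indication of how precise the estimate is. The seed, the use of `rbinom()` in place of flip-by-flip sampling, and the tolerance factor are assumptions of this sketch, not part of the analysis above:

```r
set.seed(2017)  # arbitrary seed, only for reproducibility
n.samples = 20000
# number of heads in 24 fair flips, repeated n.samples times
x.reps = rbinom(n.samples, size = 24, prob = 0.5)
# TRUE where a simulated outcome is at most as likely as k = 7 under H_0
# (the factor 1 + 1e-7 guards against floating-point ties)
as.extreme = dbinom(x.reps, 24, 0.5) <= dbinom(7, 24, 0.5) * (1 + 1e-7)
p.mc = mean(as.extreme)
# standard error of the Monte Carlo estimate (a binomial proportion)
se.mc = sqrt(p.mc * (1 - p.mc) / n.samples)
c(estimate = p.mc, std.error = se.mc)
```

The standard error shrinks with the square root of the number of samples, which is why the running estimate stabilizes only slowly.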

fix a significance level, e.g.: \(0.05\)

we say that a test result is significant iff the \(p\)-value is below the pre-determined significance level

we reject the null hypothesis in case of significant test results

the significance level thereby bounds the probability of an \(\alpha\)-error, i.e., of falsely rejecting a true null hypothesis
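The decision rule, and the \(\alpha\)-error it implies, can be sketched for the coin example. Because the sample space is discrete, the probability of landing in the rejection region under \(H_0\) may be smaller than the nominal level; the variable names are ad hoc:

```r
alpha = 0.05
n = 24
# exact two-sided p-value for every possible number of heads
p.values = sapply(0:n, function(k) binom.test(k, n, p = 0.5)$p.value)
# decision for the observed k = 7 (index k + 1 because k starts at 0)
significant = p.values[7 + 1] < alpha
significant
# probability, under H_0, of observing an outcome that leads to rejection
alpha.actual = sum(dbinom(0:n, n, 0.5)[p.values < alpha])
alpha.actual
```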

let \(H_0^{ \theta = z}\) be the null hypothesis that assumes that parameter \(\theta = z\)

fix sampling distribution \(P(\cdot \mid H_0^{ \theta = z})\) and test statistic \(t\) as before

the level \((1 - \alpha)\) confidence interval for outcome \(x\) is the largest interval \([a, b]\) such that:

\[ p(x ; H_0^{\theta = z}) > \alpha \ \ \ \text{for all } z \in [a, b]\]

intuitive slogan: range of values that we would not reject
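This definition can be approximated numerically by testing a grid of candidate values \(z\) and keeping those that are not rejected. A minimal sketch for 7 heads in 24 flips, assuming a grid resolution of 0.001 and the exact two-sided test; note that `binom.test()` itself reports a Clopper-Pearson interval, which is constructed differently and need not coincide with the result:

```r
alpha = 0.05
# candidate null values z for theta (grid resolution is an arbitrary choice)
z.grid = seq(0.001, 0.999, by = 0.001)
# exact two-sided p-value of the observation under each H_0: theta = z
p.values = sapply(z.grid, function(z) binom.test(7, 24, p = z)$p.value)
# values of z that would not be rejected at level alpha
not.rejected = z.grid[p.values > alpha]
# approximate interval: smallest and largest non-rejected value
ci.approx = range(not.rejected)
ci.approx
```

Taking the range is itself a simplification: for discrete data the set of non-rejected values is not guaranteed to be a single interval.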

Wagenmakers (2007)

fair coin?

\[ B(k ; n = 24, \theta = 0.5) = \binom{n}{k} \theta^{k} \, (1-\theta)^{n-k} \]

fair coin?

\[ NB(n ; k = 7, \theta = 0.5) = \frac{k}{n} \binom{n}{k} \theta^{k} \, (1-\theta)^{n - k}\]
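The two sampling plans can be compared directly. The sketch below computes the exact \(p\)-value of the same data (7 heads in 24 flips) once under the binomial plan and once under the negative binomial plan; truncating the negative binomial sample space at 1000 flips and the tolerance factor are assumptions of this sketch:

```r
k = 7; n = 24; theta = 0.5
tol = 1 + 1e-7  # guards against floating-point ties

# plan 1: flip n = 24 times, count heads (binomial)
p.binom = binom.test(k, n, p = theta)$p.value

# plan 2: flip until k = 7 heads occur, count flips (negative binomial);
# dnbinom() is parameterized by the number of failures, here n - k
n.grid = k:1000  # truncated sample space; the neglected tail mass is negligible
nb.prob = dnbinom(n.grid - k, size = k, prob = theta)
nb.obs = dnbinom(n - k, size = k, prob = theta)
# total probability of all outcomes at most as likely as n = 24 flips
p.nbinom = sum(nb.prob[nb.prob <= nb.obs * tol])

c(binomial = p.binom, negative.binomial = p.nbinom)
```

Under these assumptions the same observation yields a \(p\)-value above 0.05 for the first plan and below 0.05 for the second, so the verdict depends on the sampling intention.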

what does it mean to repeat an experiment?

tons of gruesome scenarios:

read more on preregistration and reproducibility

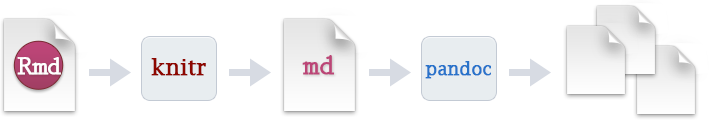

prepare, analyze & plot data right inside your document

export to a variety of different formats

headers & sections

    # header 1
    ## header 2
    ### header 3

emphasis, highlighting etc.

    *italics* or _italics_
    **bold** or __bold__
    ~~strikeout~~

links

[link](https://www.google.com)

inline code & code blocks

`function(x) return(x - 1)`

extension of markdown to dynamically integrate R output

multiple output formats:

cheat sheet and a quick tour

inline equations with $\theta$

equation blocks with

$$
\begin{align*}
E &= mc^2 \\
  &= \text{a really smart formula}
\end{align*}
$$

caveat

LaTeX-style formulas will be rendered differently depending on the output method:

do it all in one file BDA+CM_HW1_YOURLASTNAME.Rmd

use a header that generates HTML files, like this:

    ---
    title: "My flawless first homework set"
    date: 2017-05-8
    output: html_document
    ---

have all code and plots appear at the appropriate place between your text answers, which explain the code and the plots

send the *.Rmd and the *.HTML

avoid using extra material not included in the *.Rmd

Tuesday

Friday

obligatory

prepare Kruschke chapters 5 & 6